2 CS 189 Discussion 02: Machine Learning Design

2.0.1 Contact Information

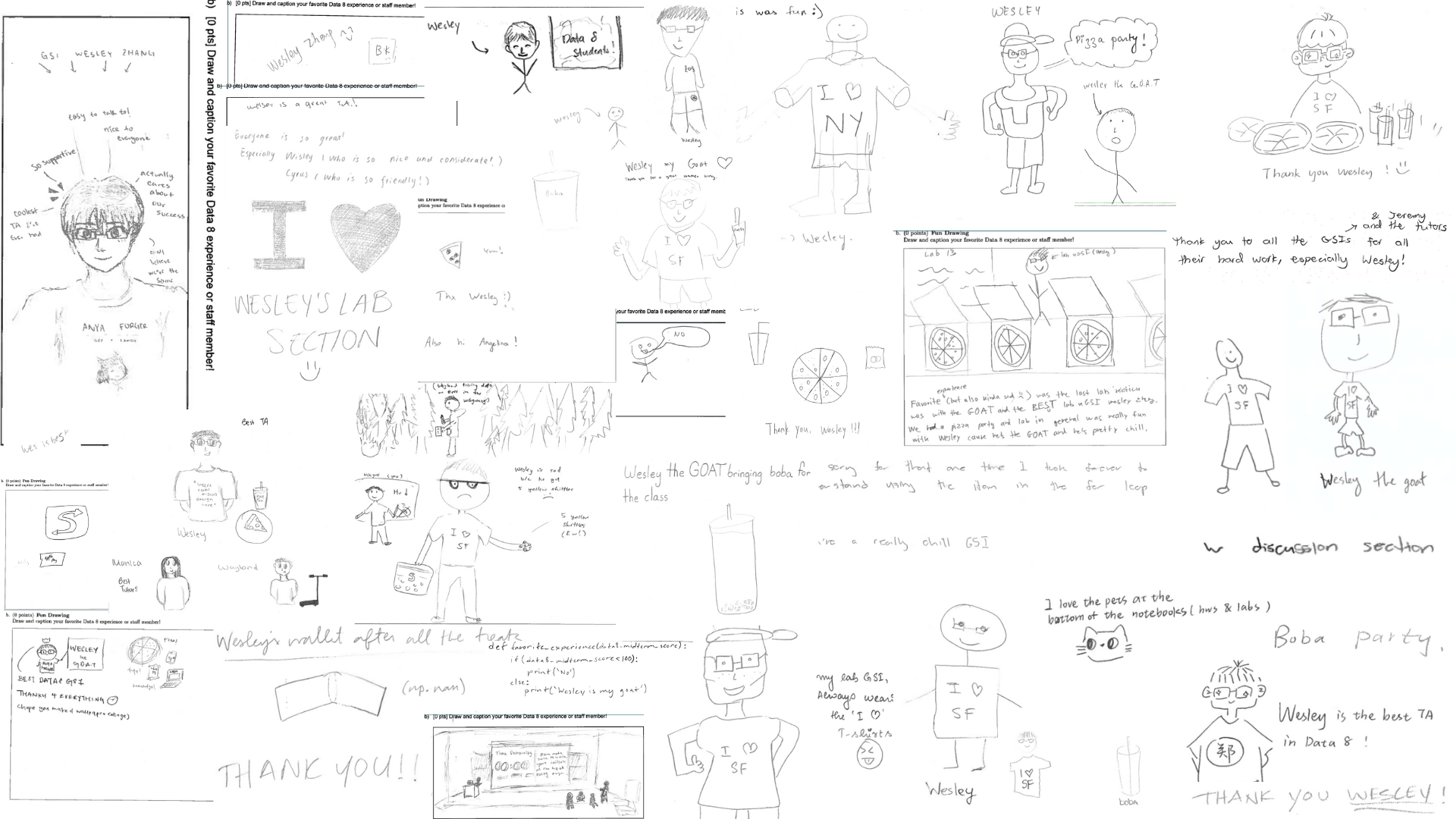

| Name | Wesley Zheng |

| Pronouns | He/him/his |

| wzheng0302@berkeley.edu | |

| Discussion | Wednesdays, 11–12 PM @ Wheeler 120 & Wednesdays, 3-4 PM @ Hildebrand Hall B51 |

| Office Hours | Tuesdays, 11–1 PM @ Cory Courtyard |

2.1 Linear Algebra

A symmetric matrix \(A\in \mathbb{R}^{n\times n}\) is if for all \(x\in\mathbb{R}^n\), \(x^\top A x \geq 0\). Show that \(A\) is positive semi-definite if and only if all eigenvalues of \(A\) are non-negative.

Linear algebra underpins modern machine learning by providing the language to represent data, transformations, and optimization objectives.

Orthonormal / Orthogonal Matrices

- A matrix \(A\) is orthonormal (orthogonal) if

\[ A^T = A^{-1}, \quad \text{equivalently } A^T A = I. \]

- Columns (and rows) form an orthonormal basis.

- Preserve lengths and angles: \(\|Ax\|_2 = \|x\|_2\).

Spectral Theorem

- Any real symmetric matrix \(A\) can be decomposed as

\[ A = Q \Lambda Q^T, \]

where \(Q\) is orthogonal and \(\Lambda\) is diagonal.

- Diagonal entries of \(\Lambda\) are real eigenvalues; columns of \(Q\) are orthonormal eigenvectors.

- Central to PCA and quadratic forms.

Singular Value Decomposition (SVD)

- Any matrix \(A \in \mathbb{R}^{m \times n}\) can be written as

\[ A = U \Sigma V^T, \]

where \(U\) and \(V\) are orthogonal and \(\Sigma\) is diagonal with nonnegative entries (singular values).

- Works for all matrices (not just symmetric).

- Used in dimensionality reduction, low-rank approximation, and numerical stability.

Basis

- A basis is a set of vectors that is

- Linearly independent, and

- Spans the vector space.

- Linearly independent, and

- Every vector in the space can be written uniquely as a linear combination of basis vectors.

Norms

- A norm assigns a nonnegative length to vectors and satisfies positivity, homogeneity, and the triangle inequality.

Common Vector & Matrix Norms

\(\ell_2\) norm (Euclidean):

\[ \|x\|_2 = \sqrt{\sum_i x_i^2} \]

Standard geometric length.\(\ell_1\) norm:

\[ \|x\|_1 = \sum_i |x_i| \]

Encourages sparsity; used in Lasso.\(\ell_\infty\) norm:

\[ \|x\|_\infty = \max_i |x_i| \]

Measures the largest coordinate magnitude.Frobenius norm (matrices):

\[ \|A\|_F = \sqrt{\sum_{i,j} A_{ij}^2} \]

Equivalent to applying \(\ell_2\) to all entries.Spectral norm (operator norm):

\[ \|A\|_2 = \max_{\|x\|_2=1} \|Ax\|_2 \]

Equal to the largest singular value of \(A\); measures maximum amplification.

Answer

Since \(A\) is symmetric, by the Spectral Theorem, there exists an orthonormal basis of \(\mathbb{R}^n\) consisting of eigenvectors \(v_1,\dots,v_n\) of \(A\), where the corresponding eigenvalues \(\lambda_1,\dots,\lambda_n\) are all real-valued.

Assume \(A\) is positive semi-definite. Then, for all eigenvectors \(v_i\), we have \(v_i^\top A v_i \geq 0\). Noting that \(A v_i = \lambda_iv_i\), this implies that \(v_i^\top (\lambda_iv_i) = \lambda_i (v_i^\top v_i) = \lambda_i \geq 0\), where we use that \(v_i^\top v_i = 1\) since \(v_i\) has unit norm.

Assume all \(\lambda_i\geq 0\). Consider an arbitrary \(x\in \mathbb{R}^n\). Since the \(v_i\) form a basis of \(\mathbb{R}^n\), there exist scalars \(\alpha_i\in\mathbb{R}\) such that \(x = \sum_{i=1}^n \alpha_i v_i\). Thus, \[ \begin{align*} x^\top A x &= \left(\sum_{i\in [n]} \alpha_i v_i\right)^\top A \left(\sum_{j\in [n]} \alpha_j v_j\right) \\ &= \sum_{i\in [n]} \sum_{j\in [n]} \alpha_i\alpha_j v_i^\top A v_j \\ &= \sum_{i\in [n]} \sum_{j\in [n]} \alpha_i\alpha_j v_i^\top (\lambda_j v_j) \\ &= \sum_{i\in [n]} \sum_{j\in [n]} \alpha_i\alpha_j\lambda_j (v_i^\top v_j) \\ &= \sum_{i\in [n]} \alpha_i^2\lambda_i, \end{align*} \] where we use that \(v_i^\top v_j = 0\) for \(i\neq j\) and \(v_i^\top v_i = 1\) in the final equality. Since the \(\alpha_i\) are real and all \(\lambda_i\geq 0\), this implies that \(x^\top A x \geq 0\).

2.2 Probability

The probability density function (PDF) of a random variable \(U\sim \text{Unif}[0,1]\) is \[f(t) = \begin{cases} 0, &t < 0,\\ 1, &0 \leq t < 1, \\ 0, &t\geq 1. \end{cases}\] Let \(X,Y,Z\) be independent random variables \(\sim \text{Unif}[0,1]\). Find the PDF of \(M = \min(X,Y,Z)\).

Probability distributions describe how likely different outcomes are, forming the foundation for modeling uncertainty in machine learning.

Uniform Distribution

- All outcomes in a given interval have equal probability.

- Continuous case: \(x \sim \text{Uniform}(a,b)\),

\[ f(x) = \frac{1}{b-a}, \quad a \le x \le b \]

Bernoulli Distribution

- Models a binary outcome (0 or 1) with success probability \(p\).

- \(X \sim \text{Bernoulli}(p)\),

\[ P(X=1) = p, \quad P(X=0) = 1-p \]

Binomial Distribution

- Counts the number of successes in \(n\) independent Bernoulli trials.

- \(X \sim \text{Binomial}(n, p)\),

\[ P(X=k) = \binom{n}{k} p^k (1-p)^{n-k}, \quad k=0,1,\dots,n \]

Gaussian (Normal) Distribution

- Continuous, symmetric bell curve defined by mean \(\mu\) and variance \(\sigma^2\).

- \(X \sim \mathcal{N}(\mu, \sigma^2)\),

\[ f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\Big(-\frac{(x-\mu)^2}{2\sigma^2}\Big) \]

Multinomial Distribution

- Generalization of Bernoulli to multiple discrete classes.

- Counts outcomes of \(n\) independent trials over \(k\) classes with probabilities \(p_1,\dots,p_k\).

- \(X \sim \text{Multinomial}(n, \mathbf{p})\),

\[ P(X_1=x_1, \dots, X_k=x_k) = \frac{n!}{x_1! \cdots x_k!} \prod_{i=1}^k p_i^{x_i}, \quad \sum_i x_i = n \]

Answer

The cumulative distribution function (CDF) \(F(t) = \Pr[U \leq t]\) of \(U\) is \[\begin{align*} F(t) = \int_{-\infty}^t f(s)\ ds = \begin{cases} 0, &t < 0,\\ t, &0\leq t < 1,\\ 1, &t \geq 1. \end{cases} \end{align*}\] By definition, all of \(X,Y,Z\) have PDF \(f\) and CDF \(F\). Let \(F_M\) denote the CDF of \(M\). For \(t\in\mathbb{R}\), \[\begin{align*} F_M(t) &= \Pr[M \leq t] \\ &= 1 - \Pr[M > t] \\ &= 1 - \Pr[X,Y,Z > t] \\ &= 1 - \Pr[X>t]\Pr[Y>t]\Pr[Z>t], \end{align*}\] where the final equality follows from the independence of \(X,Y,Z\). Thus, \[\begin{align*} F_M(t) &= 1 - (1 - \Pr[X\leq t])(1 - \Pr[Y\leq t])(1 - \Pr[Z\leq t]) \\ &= 1 - (1 - F(t))^3. \end{align*}\] Utilizing the definition of \(F(t)\) from above yields that \(M\) has CDF \[\begin{align*} F_M(t) &= \begin{cases} 0, &t<0, \\ 1 - (1-t)^3, &0\leq t < 1, \\ 1, &t\geq 1. \end{cases} \end{align*}\] Differentiation yields that \(M\) has PDF \[\begin{align*} f_M(t) &= \begin{cases} 0, &t<0,\\ 3(1-t)^2, &0\leq t <1, \\ 0, &t\geq 1. \end{cases} \end{align*}\]

2.3 Matrix Manipulation

Interpret what the following matrices achieve as linear transformations.

2.3.1 (a)

\(\begin{bmatrix} -1&0\\0&1 \end{bmatrix}\)

Answer

Consider the vector \((a,b)^\top\in\mathbb{R}^2\). Then, \[\begin{bmatrix} -1&0\\0&1 \end{bmatrix}\begin{bmatrix} a\\b \end{bmatrix} = \begin{bmatrix} -a\\b \end{bmatrix}.\] The first entry is negated, while the second entry is fixed. This corresponds to reflection about the line \(x = 0\) (i.e., the \(y\)-axis).2.3.2 (b)

\(\begin{bmatrix} 0&0&1\\0&1&0\\1&0&0 \end{bmatrix}\)

Answer

Consider the vector \((a,b,c)^\top\in\mathbb{R}^3\). Then, \[\begin{bmatrix} 0&0&1\\0&1&0\\1&0&0 \end{bmatrix}\begin{bmatrix} a\\b\\c \end{bmatrix} = \begin{bmatrix} c\\b\\a \end{bmatrix}.\] The entries of the vector are reversed. This corresponds to reflection about the plane \(x=z\).

Now, find matrices to accomplish the following transformations.

2.3.3 (c)

Rotate the plane of \(\mathbb{R}^2\) by \(\theta\) radians counterclockwise.

Answer

This represents a linear map \(\mathbb{R}^2\to\mathbb{R}^2\) that maps the basis vectors \(e_1\mapsto \cos\theta \cdot e_1 + \sin\theta\cdot e_2\) and \(e_2\mapsto -\sin\theta \cdot e_1 + \cos\theta \cdot e_2\). The corresponding matrix is \(\begin{bmatrix} \cos\theta &-\sin\theta\\ \sin\theta&\cos\theta \end{bmatrix}\).

(Note. This shows that \(\cos^2\theta + \sin^2\theta = 1\), since the determinant of this linear map must be \(1\).)

2.3.4 (d)

Rotate the plane of \(\mathbb{R}^2\) first by \(\theta\) radians counterclockwise, and then by an additional \(\phi\) radians counterclockwise.

Answer

We provide two solutions.

By Part (b), this matrix is \(\begin{bmatrix} \cos(\phi+\theta) &-\sin(\phi+\theta)\\ \sin(\phi+\theta)&\cos(\phi+\theta) \end{bmatrix}\).

As a composition, this linear map can be represented as the matrix product \[\begin{bmatrix} \cos\phi &-\sin\phi\\ \sin\phi&\cos\phi \end{bmatrix}\begin{bmatrix} \cos\theta &-\sin\theta\\ \sin\theta&\cos\theta \end{bmatrix} = \begin{bmatrix} (\cos\phi\cos\theta - \sin\phi\sin\theta) & -(\sin\phi\cos\theta+\cos\phi\sin\theta ) \\ (\sin\phi\cos\theta + \cos\phi\sin\theta)&(\cos\phi\cos\theta - \sin\phi\sin\theta) \end{bmatrix}.\]

(Note. This yields the angle-sum identities for \(\sin\) and \(\cos\), since the entries of the answers in both bullets must be equal.)

2.3.5 (e)

Add \(1\) to each entry of all elements of \(\mathbb{R}^2\).

Answer

First, note that this is a linear map. (An immediate reason is because the vector \((0,0)^\top\) is mapped to \((1,1)^\top\).) However, we can embed \(\mathbb{R}^2\) into \(\mathbb{R}^3\) by associating each vector \((a,b)^\top\in\mathbb{R}^2\) with the vector \((a,b,1)^\top\in\mathbb{R}^3\). Then, the matrix \(\begin{bmatrix} 1&0&1\\0&1&1\\0&0&1 \end{bmatrix}\) achieves our goal, since \[\begin{bmatrix} 1&0&1\\0&1&1\\0&0&1 \end{bmatrix}\begin{bmatrix} a\\b\\1 \end{bmatrix} = \begin{bmatrix} a+1\\b+1\\1 \end{bmatrix}.\] (Note. This motivates the use of in machine learning models.)

2.4 Machine Learning Taxonomy

We explore where current machine learning techniques fit into the machine learning taxonomy. Below is a list of machine learning solutions that are used for automated decision making today:

- PayPal Fraud Protection learns to recognize common fraud patterns to detect fraud.

- Amazon’s SageMaker groups customers for targeted marketing and recommendations.

- Zillow Zestimate estimates the market value for homes based on on-sale home prices.

- UCLA Health’s Epic model identifies the risk of patients for preventable hospital visits.

For each of these examples, decide where in our machine learning taxonomy the approach best fits. Briefly explain your reasoning for each.

Machine learning revolves around data, models, and optimization, connecting observed information to predictions and decisions.

Data

- Labeled data: Each example has a known output.

- Classification: Labels are categorical (e.g., spam vs. not spam).

- Regression: Labels are quantitative (e.g., house price).

- Classification: Labels are categorical (e.g., spam vs. not spam).

- Unlabeled data: No output labels; structure is inferred.

- Clustering: Group similar points.

- Dimensionality reduction: Position points in lower-dimensional space while preserving structure.

- Clustering: Group similar points.

Models

- Decision functions: Map inputs to predictions.

- Examples: linear, polynomial, logistic, neural networks.

- Examples: linear, polynomial, logistic, neural networks.

- Instance-based & tree models:

- Nearest neighbors, decision trees.

- Nearest neighbors, decision trees.

- Used for classification, regression, or representation learning.

Optimization Problem

- Defines how to learn model parameters.

- Components: variables, objective function, constraints.

- Types:

- Unconstrained optimization

- Convex programs

- Least squares

- PCA (principal component analysis)

- Unconstrained optimization

Optimization Algorithm

- Techniques to solve the optimization problem.

- Examples:

- Gradient descent – iterative minimization using gradients.

- Simplex – linear programming.

- SVD – used in low-rank approximations and PCA.

- Gradient descent – iterative minimization using gradients.

Answer

- PayPal Fraud Detection: PayPal uses historical transactions labeled as fraud/non-fraud to train cost-sensitive classifiers, so it relies on supervised learning.

- Amazon’s SageMaker: Amazon utilizes a clustering algorithm (an unsupervised learning technique) to group customers based on behavioral data such as purchase history, browsing patterns, and engagement metrics.

- Zillow Zestimate: Zillow uses ensembles of classical regression models to predict the market value of homes that are not on sale based on the sale prices of other homes on the market, so it relies on supervised learning.

- UCLA Health’s Epic: The Epic model is trained on patient health records and binary labels (did the patient have a preventable hospital visit?). The model then generates a probabilistic output that predicts whether a new patient is at risk of a preventable hospital visit. This is a form of supervised learning and, more specifically, binary classification.